How to analyse FN / FP on detection annotations

What are FN / FP?

In computer vision, FN (False Negative) and FP (False Positive) are two types of error used to evaluate the performance of a detection model.

- FN (False Negative): the model fails to detect an object or feature that is actually present in the image.

- FP (False Positive): the model reports an object or feature that is not actually present in the image.
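FN and FP counts feed directly into the two standard detection metrics: FP lowers precision, while FN lowers recall. The function below is a minimal illustrative sketch of that arithmetic, assuming the TP / FP / FN counts have already been obtained by matching predictions to Ground Truth. The name `precision_recall` is hypothetical, and returning 0.0 for an empty denominator is an assumed convention.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) from TP, FP and FN counts (hypothetical helper)."""
    # Precision: share of predicted boxes that are correct. Every FP lowers it.
    # Assumed convention: 0.0 when there are no predictions at all.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: share of Ground Truth objects that were found. Every FN lowers it.
    # Assumed convention: 0.0 when there is no Ground Truth at all.
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Example: 8 correct detections, 2 false detections (FP), 4 missed objects (FN).
p, r = precision_recall(tp=8, fp=2, fn=4)
print(f"precision={p:.2f}, recall={r:.3f}")  # precision=0.80, recall=0.667
```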

Both FN and FP have a significant impact on a deep learning model's performance, which makes them important indicators when evaluating and optimizing a model during training.

Prerequisites

- The Ground Truth results have been imported into the dataset.

- The user's predicted results set has been imported into the dataset.

- FN / FP analysis currently supports only the Detection annotation type.

Usage

Enter analysis mode

- Click the 'Analysis' button in the top menu bar.

- Select the predicted results set you want to compare, then click the 'Analyze FN / FP' button to enter analysis mode.

Analyse

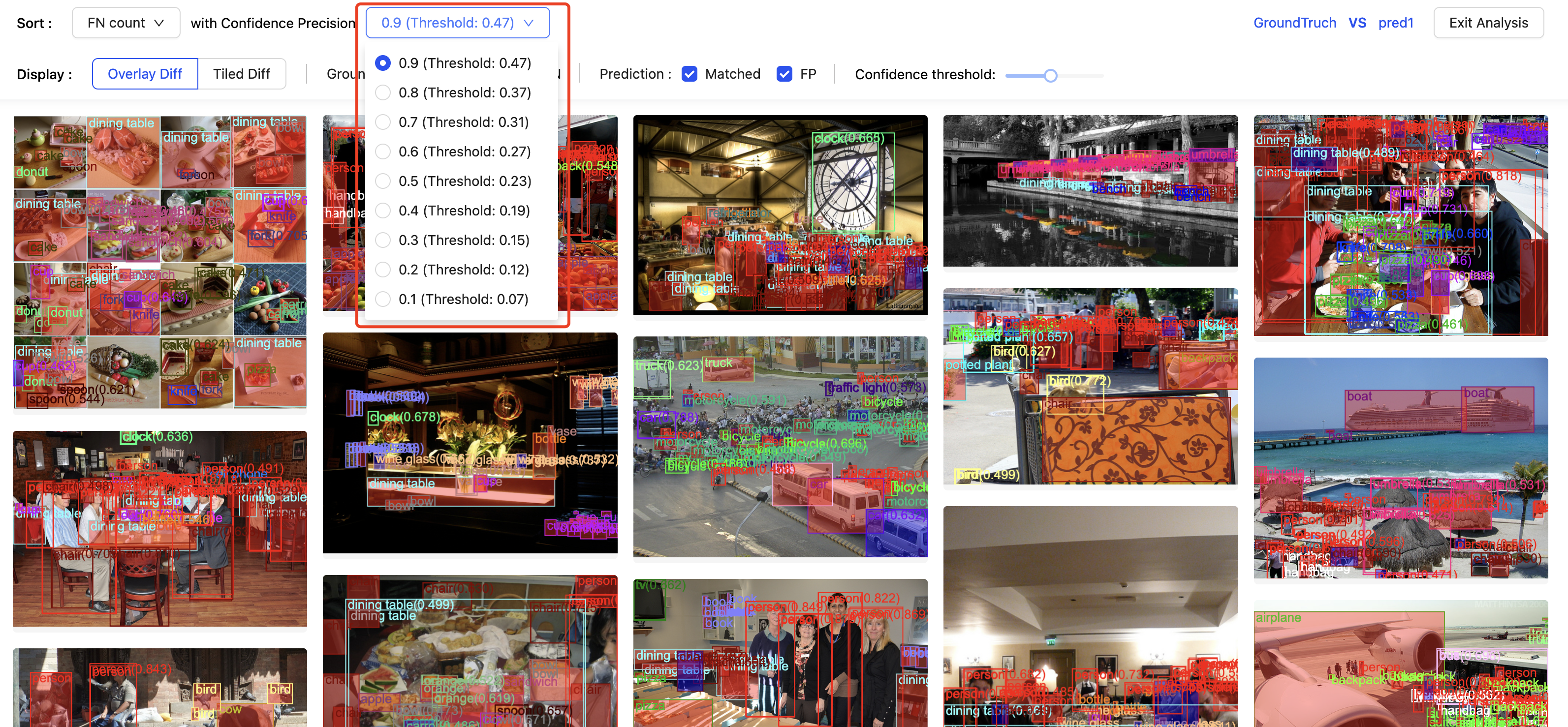

For precision values from 0.1 to 0.9, the system pre-calculates the confidence threshold that achieves each precision and the corresponding FN / FP results.

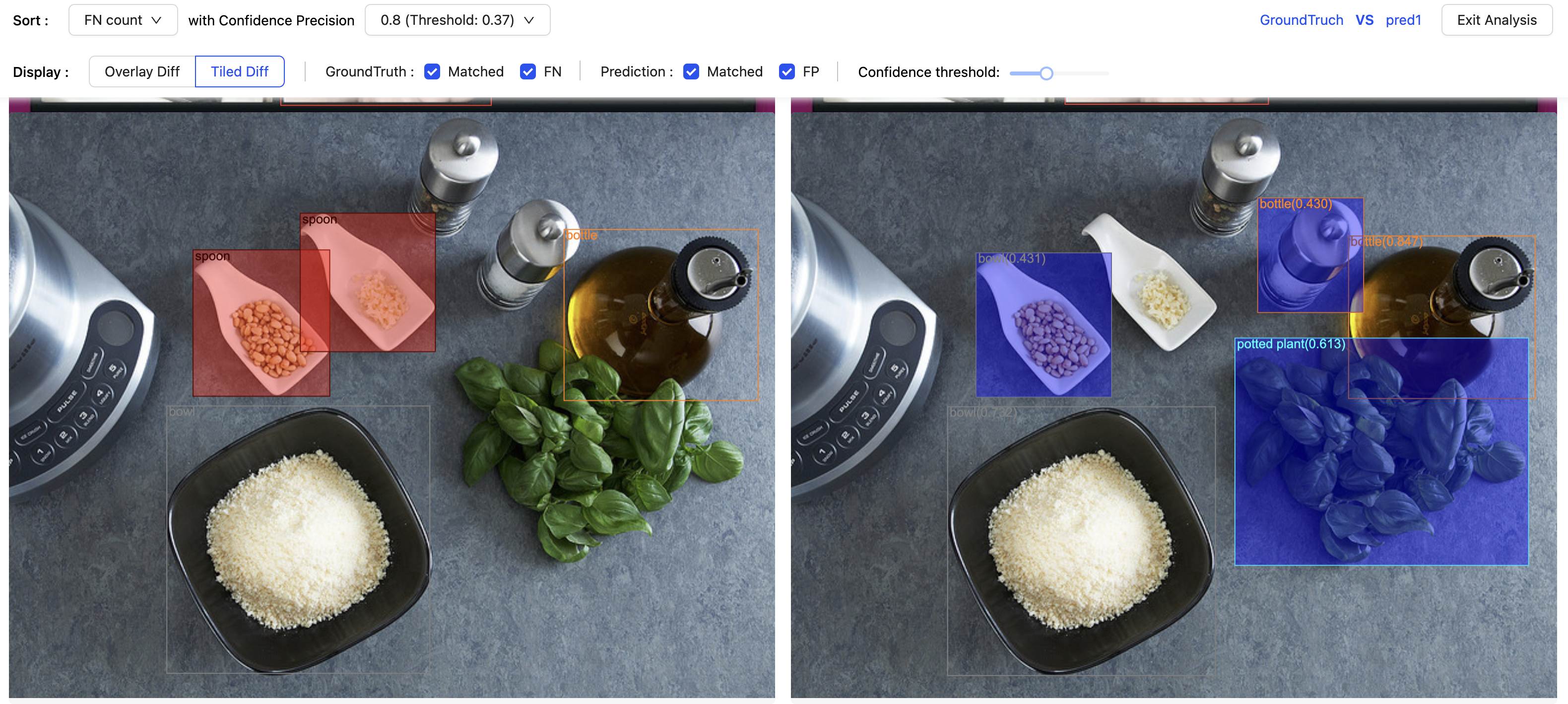
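The tool's exact procedure is not documented here, so the following is only a sketch of one common way to derive a confidence threshold for each target precision. It assumes every predicted box has already been labeled TP or FP by greedy matching in descending confidence order (which makes each label independent of the threshold), and that a threshold keeps all boxes with confidence greater than or equal to it. For each target it picks the lowest threshold that still meets the precision, i.e. the one that keeps the most boxes. All function names and the input format are hypothetical.

```python
def precision_cut_points(scored):
    """scored: list of (confidence, is_tp) for every predicted box (assumed format).

    Returns (threshold, tp, fp) for each distinct confidence, highest first,
    where the counts cover every box with confidence >= threshold.
    """
    ranked = sorted(scored, key=lambda s: s[0], reverse=True)
    cuts, tp, fp = [], 0, 0
    for i, (conf, is_tp) in enumerate(ranked):
        if is_tp:
            tp += 1
        else:
            fp += 1
        # Only record a cut after the last box of a group of tied confidences.
        if i + 1 == len(ranked) or ranked[i + 1][0] < conf:
            cuts.append((conf, tp, fp))
    return cuts


def thresholds_for_precision(scored, num_gt, targets):
    """Map each target precision to {'threshold', 'fn', 'fp'}, or None if unreachable."""
    cuts = precision_cut_points(scored)
    results = {}
    for target in targets:
        # Cuts that meet the target; the last one has the lowest threshold.
        ok = [c for c in cuts if c[1] / (c[1] + c[2]) >= target]
        if ok:
            thr, tp, fp = ok[-1]
            # FN = Ground Truth boxes not matched by any kept prediction.
            results[target] = {"threshold": thr, "fn": num_gt - tp, "fp": fp}
        else:
            results[target] = None
    return results


scored = [(0.95, True), (0.90, True), (0.80, False),
          (0.70, True), (0.60, False), (0.40, False)]
targets = [k / 10 for k in range(1, 10)]  # 0.1, 0.2, ..., 0.9
results = thresholds_for_precision(scored, num_gt=4, targets=targets)
print(results[0.9])  # {'threshold': 0.9, 'fn': 2, 'fp': 0}
print(results[0.5])  # {'threshold': 0.4, 'fn': 1, 'fp': 3}
```

Choosing the lowest qualifying threshold trades FP for fewer FN: a stricter precision target raises the threshold, so FP drops while FN grows.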

How to interpret boxes with different colored masks:

- Red masked box: an FN. The box appears only in Ground Truth, indicating a missed detection.

- Blue masked box: an FP. The box appears only in the predicted results set, indicating a false detection.

- Unmasked box: the predicted results set and Ground Truth match as expected.
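To make the color coding concrete, here is a minimal sketch of how boxes in a single image could be assigned red, blue, or no mask. It assumes class-agnostic matching, boxes in `(x1, y1, x2, y2)` form, and an IoU threshold of 0.5; none of these details are confirmed for this tool, and the helper names are hypothetical. Predictions are matched greedily from highest confidence, each to the best still-unmatched Ground Truth box.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def classify_boxes(gt_boxes, pred_boxes, iou_thr=0.5):
    """gt_boxes: [(x1, y1, x2, y2)]; pred_boxes: [((x1, y1, x2, y2), confidence)].

    Returns per-box masks: "red" (FN), "blue" (FP) or None (matched, unmasked).
    """
    gt_mask = ["red"] * len(gt_boxes)       # unmatched Ground Truth = missed (FN)
    pred_mask = ["blue"] * len(pred_boxes)  # unmatched prediction = false (FP)
    order = sorted(range(len(pred_boxes)), key=lambda i: pred_boxes[i][1], reverse=True)
    for pi in order:
        box = pred_boxes[pi][0]
        best_gi, best_iou = None, iou_thr
        for gi, gt in enumerate(gt_boxes):
            if gt_mask[gi] == "red":  # only Ground Truth boxes not yet matched
                overlap = iou(box, gt)
                if overlap >= best_iou:
                    best_gi, best_iou = gi, overlap
        if best_gi is not None:
            gt_mask[best_gi] = None
            pred_mask[pi] = None
    return gt_mask, pred_mask


gt = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [((1, 1, 10, 10), 0.9), ((50, 50, 60, 60), 0.8)]
print(classify_boxes(gt, preds))  # ([None, 'red'], [None, 'blue'])
```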

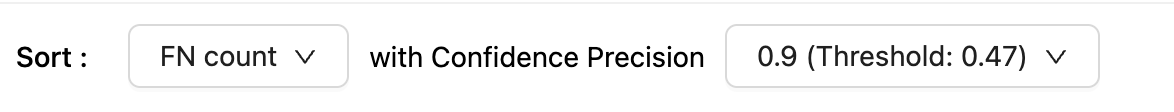

Sorting options

- Select one of the preset confidence threshold options as the basis for sorting.

- Sort images in descending order of the number of FNs or FPs calculated for each image.
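The two sorting options combine into a simple rule: pick the per-image counts computed at the chosen preset, then order images by FN or FP count, largest first. The sketch below assumes those counts are available as a nested dictionary keyed by preset and then by image; this data layout and the name `sort_images` are hypothetical.

```python
def sort_images(counts_by_preset, preset, key="fn"):
    """Return image ids sorted by FN or FP count (descending) at one preset.

    counts_by_preset: {preset: {image_id: {"fn": int, "fp": int}}} (assumed layout).
    key: "fn" or "fp".
    """
    counts = counts_by_preset[preset]
    # sorted() is stable, so images with equal counts keep their original order.
    return sorted(counts, key=lambda img: counts[img][key], reverse=True)


counts_by_preset = {
    0.5: {"a.jpg": {"fn": 1, "fp": 4}, "b.jpg": {"fn": 3, "fp": 0}, "c.jpg": {"fn": 2, "fp": 2}},
    0.9: {"a.jpg": {"fn": 2, "fp": 1}, "b.jpg": {"fn": 5, "fp": 0}, "c.jpg": {"fn": 4, "fp": 0}},
}
print(sort_images(counts_by_preset, 0.5, "fp"))  # ['a.jpg', 'c.jpg', 'b.jpg']
```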

Display options

- Two comparison modes are supported: overlay comparison and tiled comparison.

- For Ground Truth, toggle whether to display the boxes that match the predicted results set and the boxes that represent missed detections (FN).

- For the predicted results set, toggle whether to display the boxes that match Ground Truth and the boxes that represent false detections (FP).

- Under the current sorting condition, switch between confidence threshold options to dynamically display the corresponding results.
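The four box-visibility toggles act as independent filters: one pair for Ground Truth (matched vs. missed) and one pair for the predicted results set (matched vs. false). The sketch below models them with a hypothetical `DisplayOptions` dataclass and per-box status strings; these names are illustrative only and are not the tool's actual settings.

```python
from dataclasses import dataclass


@dataclass
class DisplayOptions:
    """Hypothetical model of the four visibility toggles."""
    show_gt_matched: bool = True    # Ground Truth boxes matched by a prediction
    show_gt_missed: bool = True     # Ground Truth boxes with no match (FN, red)
    show_pred_matched: bool = True  # predicted boxes matched to Ground Truth
    show_pred_false: bool = True    # predicted boxes with no match (FP, blue)


def visible_boxes(gt_status, pred_status, opts):
    """Return indices of visible boxes; statuses are 'matched', 'fn' or 'fp'."""
    gt = [i for i, s in enumerate(gt_status)
          if (s == "matched" and opts.show_gt_matched)
          or (s == "fn" and opts.show_gt_missed)]
    pred = [i for i, s in enumerate(pred_status)
            if (s == "matched" and opts.show_pred_matched)
            or (s == "fp" and opts.show_pred_false)]
    return gt, pred


# Hide all matched boxes so that only errors (FN and FP) remain on screen.
errors_only = DisplayOptions(show_gt_matched=False, show_pred_matched=False)
print(visible_boxes(["matched", "fn"], ["matched", "fp"], errors_only))  # ([1], [1])
```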

More demonstrations are shown below: